New Data Centre Cooling Technologies Go Green

Fri, 15 Feb 2013


From the perspective of both economics and the environment, data centre cooling has a somewhat chequered history. In an ideal world, all energy entering the data centre would be used to power equipment, with no overheads such as cooling, lighting, wastage or ancillary circuits. However, at a typical data centre facility, most of the energy that is not used to power the IT load is used to keep the server rooms cool. A ratio known as Power Usage Effectiveness (PUE) is a useful metric to gauge the efficiency of a facility:

PUE = (all energy consumed by the facility) divided by (the energy used by the IT equipment)

Therefore a PUE of 1.89 means that for every 1.89 watts into the utility meter, only 1 watt is delivered out to the IT load. The other 0.89 watts are undesirable overheads. In this example, servicing the data centre, including keeping it cool, requires almost as much power as the IT load itself. The ideal PUE is 1.0 - where all energy expended goes into powering the IT equipment.
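As a minimal sketch of the arithmetic above, the ratio can be worked through in a few lines of Python. The function names and the 189 kW / 100 kW meter readings are illustrative assumptions chosen to reproduce the 1.89 example, not measurements from any real facility.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts of non-IT overhead for each watt delivered to the IT load."""
    return pue_value - 1.0


# Hypothetical readings: 189 kW at the utility meter, 100 kW reaching the IT load.
example = pue(189.0, 100.0)
print(round(example, 2))                        # 1.89
print(round(overhead_per_it_watt(example), 2))  # 0.89
```

An ideal facility would return 1.0 from `pue` and 0.0 from `overhead_per_it_watt`.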

Lowering a PUE means reducing power and cost expenditure, with the positive side effect of being much kinder to the environment.

Recent developments in cooling technologies are now beginning to offer extremely low PUE ratings. Two ConnetU facilities, in Surrey and central London, report PUEs of around 1.15 and 1.06 respectively. This contrasts with a global average of 2.5 in 2009 (Uptime Institute).
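To put those figures side by side, the short sketch below converts each quoted PUE into the share of metered energy that reaches the IT load (1 / PUE) and the overhead per IT watt (PUE minus 1). The dictionary labels and the helper name `it_share` are assumptions made for illustration; only the three PUE values come from the text.

```python
# PUE figures quoted in the article; the labels are just dictionary keys.
quoted_pue = {
    "global average, 2009": 2.5,
    "Surrey facility": 1.15,
    "central London facility": 1.06,
}


def it_share(pue_value: float) -> float:
    """Fraction of metered energy that reaches the IT load (1 / PUE)."""
    return 1.0 / pue_value


for label, value in quoted_pue.items():
    overhead = value - 1.0  # watts of overhead per watt of IT load
    print(f"{label}: {it_share(value):.0%} to IT, "
          f"{overhead:.2f} W overhead per IT watt")
```

At a PUE of 2.5, only 40% of the energy drawn from the grid does useful IT work; at the lower figures the share is well above 80%.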

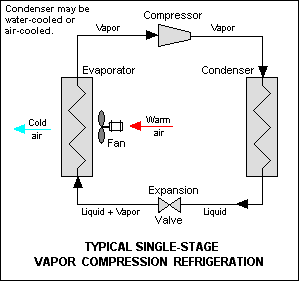

But before we look more closely at these new systems, let's review the basics of refrigeration, the traditional data centre cooling technology. To understand this technology, we need to be familiar with what engineers call the Vapour Compression Cycle.

The cycle relies on the cooling action that occurs when liquid under high pressure is released into a low-pressure environment and evaporates. As it evaporates, the refrigerant absorbs heat from the warm air passing over it, leaving that air cooler. These traditional systems are known as DX or Direct Expansion systems.

Importantly, the use of a compressor to place gas under high pressure demands a high expenditure of electricity directly from the grid. The diagram below shows how traditional DX CRAC (Computer Room Air Conditioner) systems work, with high pressure liquid passing into low pressure in the evaporator coils.

In countries such as the UK, where the climate is often cold, it is desirable to blow cold outside air through the facility without expending energy on compressor-based refrigeration. This free cooling approach requires almost no additional energy input, and therefore significantly reduces PUE.

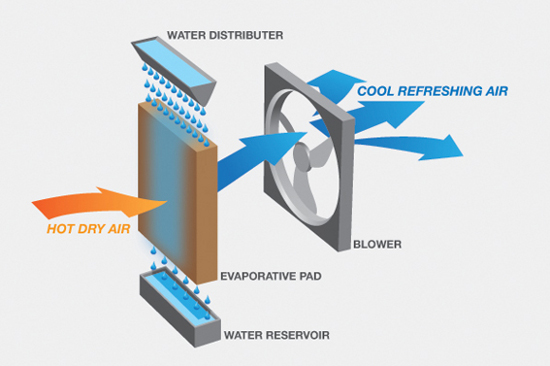

The simple concept of free cooling brings us to the revolutionary data centre cooling technology: CREC (Computer Room Evaporative Cooling). CREC uses outside air at ambient temperature and, when that air is hot, adds the chilling effect of water to bring its temperature down. Air is sucked in from outside and pushed through mesh pads that are saturated with water; the evaporating water removes heat from the air, in much the same way that sweat removes heat from the skin. The cooled air is then filtered and regulated before entering the data hall, where it is circulated before finally being drawn out of the building.
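The cooling achieved by wetted pads is commonly estimated with the standard saturation-effectiveness relation for direct evaporative coolers: the supply temperature equals the outside dry-bulb temperature minus a fraction of the gap between dry-bulb and wet-bulb temperature. The sketch below applies that relation; the function name, the 0.85 pad effectiveness and the example weather values are assumptions for illustration and do not describe any particular CREC product.

```python
def evaporative_supply_temp_c(dry_bulb_c: float,
                              wet_bulb_c: float,
                              pad_effectiveness: float = 0.85) -> float:
    """Estimate air temperature leaving a wetted pad (direct evaporative cooling).

    pad_effectiveness is an assumed value between 0 and 1; real pads vary.
    """
    if not 0.0 <= pad_effectiveness <= 1.0:
        raise ValueError("pad_effectiveness must be between 0 and 1")
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return dry_bulb_c - pad_effectiveness * (dry_bulb_c - wet_bulb_c)


# Hypothetical hot day: 30 C dry bulb, 20 C wet bulb.
print(evaporative_supply_temp_c(30.0, 20.0))  # 21.5
```

The drier the outside air (the larger the gap between dry-bulb and wet-bulb temperature), the more cooling the pads can deliver.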

Without the need for complex DX mechanisms, running costs can be lowered by up to a factor of 10. The simplicity of design also means low maintenance. It is safe to say that new build data centres will be keen to tap into the savings offered by evaporative cooling technology.

A typical wall-mounted evaporative cooling unit

Crossovers between the various platforms are also available: free cooling is an option for evaporative systems, just as it is for CRAH (Computer Room Air Handler) systems. Some CREC systems even employ backup or failover DX capacity as an insurance policy.
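One way to picture such a hybrid arrangement is as a simple priority order: use plain outside air when it is cool enough, add evaporation when it is not, and fall back to DX only as a last resort. The sketch below is purely illustrative; the function name, the 24 °C supply setpoint, the 0.85 pad effectiveness and the decision rule itself are assumptions, not the control logic of any real system.

```python
def select_cooling_mode(outside_dry_bulb_c: float,
                        outside_wet_bulb_c: float,
                        supply_setpoint_c: float = 24.0,
                        pad_effectiveness: float = 0.85) -> str:
    """Pick the cheapest mode that can meet a hypothetical supply setpoint."""
    # 1. Free cooling: outside air is already cool enough.
    if outside_dry_bulb_c <= supply_setpoint_c:
        return "free cooling"

    # 2. Evaporative cooling: estimate the temperature leaving the wetted pads.
    evaporative_supply_c = outside_dry_bulb_c - pad_effectiveness * (
        outside_dry_bulb_c - outside_wet_bulb_c
    )
    if evaporative_supply_c <= supply_setpoint_c:
        return "evaporative"

    # 3. Otherwise rely on the backup DX capacity.
    return "dx backup"


print(select_cooling_mode(15.0, 12.0))  # free cooling
print(select_cooling_mode(30.0, 20.0))  # evaporative
print(select_cooling_mode(35.0, 30.0))  # dx backup
```

A real controller would also account for humidity limits, filtration and hysteresis between modes, all of which are omitted here.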

Whilst the advantages of evaporative cooling are clear, we unfortunately may not see much more of it at older data centres, as retrofitting is often expensive or impractical.
