London colocation: considerations for your business

Wed, 12 Dec 2012

data centres, colocation

There are a number of reasons for London businesses to consider moving their IT equipment into colocation at a commercial data centre. In this post, we narrow an initial assessment down to five key areas.

Power: First and foremost, power is one of the two main costs factored into colocation contracts (bandwidth being the other; rack space, measured in rack units (U), matters less but is still important). Power draw, however, is what really dictates how much you're going to spend. Whilst the power charge at a data centre might initially appear expensive, it is actually very cost-effective, because it includes power continuity provided by industrial uninterruptible power supply (UPS) systems and backup generators, as well as highly available cooling. Typical office UPS systems pale by comparison, if they exist at all.
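To see why power draw dominates the bill, here is a minimal sketch that turns a current draw into a monthly energy cost. It assumes the contract bills metered energy (many colocation contracts instead charge per committed amp), a 230 V UK supply, a power factor of 1, and a hypothetical unit price; `monthly_power_cost` and all its defaults are illustrative placeholders, not real tariffs.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 h / 12)


def monthly_power_cost(amps: float, volts: float = 230.0,
                       price_per_kwh: float = 0.15,
                       power_factor: float = 1.0) -> float:
    """Estimate the monthly energy cost of a given current draw.

    Hypothetical helper: the default voltage, price and power factor
    are assumptions for illustration, not figures from any provider.
    """
    kw = amps * volts * power_factor / 1000.0  # real power in kilowatts
    kwh = kw * HOURS_PER_MONTH                  # energy used over an average month
    return kwh * price_per_kwh                  # cost in the same currency as the price


# Cost scales linearly with draw: doubling the amps doubles the bill.
for amps in (2, 4, 8, 16):
    print(f"{amps:>2} A -> {monthly_power_cost(amps):8.2f} per month")
```

Because the relationship is linear, every extra amp of draw adds the same amount to the bill, which is why trimming power-hungry hardware usually saves more than saving a few rack units.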

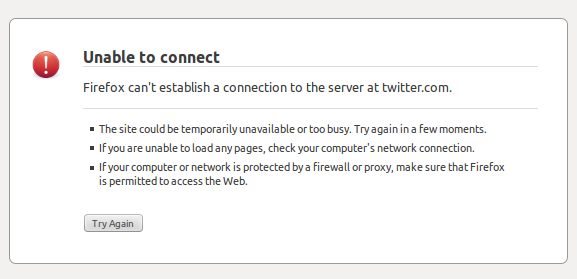

Connectivity: However you look at it, running production services (for example, file and media servers, email and RDP servers for remote employees, not to mention backup systems) all out of a city-centre office is going to be expensive and, some may say, a little reckless. Regardless of the SLAs on a costly leased line, Internet connectivity to an office is comparatively unreliable: typically a single circuit with no redundancy and no route diversity. The office relies on a single physical connection, a clear single point of failure (SPoF) that may run out onto the public highway and beyond, leaving it vulnerable to any number of disconnection events, from legal or illegal road and pavement ‘maintenance’ to traffic accidents and vandalism that takes out roadside comms boxes.

Further, latency is almost always higher on an office circuit than in a data centre, where sophisticated transmission technology and a wide choice of carriers are available. Whilst the price of office connectivity is coming down, a single office connection will never compete with the transmission infrastructure of a modern data centre.

By contrast, data centres and professional network operators connect their clients to diverse fibre transmission circuits and a fully redundant network topology that can extend all the way to dual server NIC ports, if required. With multiple routing, switching and Internet carrier options available to carry traffic, congestion and network downtime can be reduced to near zero.

Cooling: Cooling often accounts for a large share of a data centre's power overhead, and for good reason: a cooling failure on its own will soon cause overheating on the data floor. One by one, each system's thermal protection will trip and shut it down. A high weekend load during warm weather is a typical scenario in which an office-based computer room heats up and systems go offline.

Built on the redundancy platform of N+X UPS and N+1 diesel generators, the Computer Room Air-Conditioner (CRAC) systems in Tier III facilities and above are themselves redundant, meaning they are deployed as N+1: one more unit than the load requires. The industrial scale, N+1 deployment and power resilience designed into data centre cooling are again paid for by economies of scale; even a client with a very small deployment drawing only a few amps benefits from grade A infrastructure.
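The value of N+1 can be put into rough numbers with a k-of-n availability calculation: the system stays up as long as at least N of the installed units are working. This is a minimal sketch assuming identical units that fail independently (real sites also face common-mode failures, such as a shared power feed, which reduce the benefit); the per-unit availability of 0.99 and the requirement of two CRAC units are hypothetical values chosen for illustration.

```python
from math import comb


def k_of_n_availability(k: int, n: int, a: float) -> float:
    """Probability that at least k of n identical, independent units are up.

    Assumes each unit is available with probability a, independently of
    the others (a simplifying assumption).
    """
    return sum(comb(n, i) * a**i * (1 - a) ** (n - i) for i in range(k, n + 1))


UNIT_AVAILABILITY = 0.99  # hypothetical per-unit availability
N = 2                     # hypothetical: two CRAC units needed to carry the heat load

without_spare = k_of_n_availability(N, N, UNIT_AVAILABILITY)   # plain N: every unit must run
with_spare = k_of_n_availability(N, N + 1, UNIT_AVAILABILITY)  # N+1: one unit may fail

print(f"N   : {without_spare:.6f}")
print(f"N+1 : {with_spare:.6f}")
```

Under these assumptions a single spare unit cuts the expected downtime by well over an order of magnitude. The same function with `k=1, n=2` models the dual, diverse circuits described in the connectivity section: the link stays up as long as either circuit does.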

Fire Suppression: A computer room in an office building is rarely designed for the role it plays. Computer circuitry and PSUs can short-circuit, causing fires that need to be contained immediately. If the only system available is a traditional sprinkler, you can say goodbye to your hardware and, more importantly, to the data stored on it.

If there is no fire suppression system at all, the results can be far worse, with a serious risk to the building as a whole and its occupants. Data centres employ state-of-the-art fire detection and suppression systems that use inert gases or fine water mist to extinguish fires with little or no effect on electronic equipment. The latest systems can sense the location of heat and fire, restricting suppression to that area.

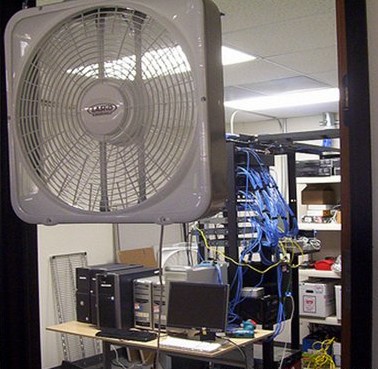

Tech support: Finally, there is staffing. Configuration can be done from anywhere on the planet, but from time to time a pair of hands is needed for tasks such as rebooting a system, racking a server, patching a cable or setting up initial connectivity. Staffing the office during office hours may not be an issue, but running to the office in the middle of the night or at the weekend is never a pleasant experience. Having qualified, dedicated staff who can respond 24×7 makes life a lot easier and gives you those precious moments to switch off. Having technically capable staff at the data centre is as important as keeping non-technical staff well away from your equipment. As the image above shows, giving servers and vacuum cleaners access to the same power sockets is simply asking for trouble.

The tangible and intangible benefits of colocation to businesses in a dense metropolitan area such as London should not be underestimated.

Here is a list of all-too-common causes of failure in office-based IT. As a do-it-yourself risk analysis, ask yourself whether your equipment is exposed to:

Overheating due to:

- an unusually hot summer day

- failure of the air-conditioning unit due to poor maintenance, age, etc.

- heating accidentally turned on in computer room

- miscalculation of cooling requirements during periods of high hardware load

- an all-too-common combination of any of the above

Fire damage due to:

- fusing/shorting of old components, commonly PSUs

- lack of suitable fire suppression systems

- unrelated activity elsewhere in buildings with multi-purpose uses and facilities

Flooding and liquid damage due to:

- building faults: pipes, roofing, radiators

- extreme weather

- water-fed sprinkler systems (triggered by a fire)

- cleaner error: an upturned bucket, the wrong cleaning methods

- misplaced staff drinks

Power outage due to:

- a local blackout or brownout

- building power malfunction

- misplaced and consequently unpaid electricity bill

- office or cleaning staff accidentally turning off or unplugging kit

Network outage due to:

- single carrier network failure

- failure of leased line

- failure of on-site networking equipment

Tech support failure, with staff unable to access equipment in an emergency due to:

- physical access being blocked, especially over the weekend: double-locked doors, etc.

- physical access being blocked by weather or transport conditions: the wrong kind of snow, transport failure

- no staff being available or aware of the situation, for various reasons: holiday, phone switched off, etc.

Murphy’s law, due to:

- if it can go wrong, it will.
