What is The Cloud?

Mon, 14 Jan 2013


The much-used term Cloud computing, or The Cloud, has uncertain origins. Cloud-shaped symbols have traditionally been used in computer network and telecoms diagrams to symbolise complex infrastructure that, for the purposes of the diagram, we don’t care about in detail: reliable services in the “ether” for which the technical blueprint is irrelevant to us.

Contemporary definitions of the term tend to refer not only to the network, but also to a pool of computing resources that are made available as a metered utility – like electricity and gas supply, you only use what you need and only pay for what you use. Technically these services could offer anything and be constructed using any infrastructure, for example: a VoIP phone service on a single SIP gateway, accounts management software on a dedicated server, or a rack of dedicated servers available for clients to contract as they require.

However, this utility supply of computing power has been made practical only with virtualisation through the use of specialised hypervisor software. Hypervisor software runs like an operating system on the underlying server hardware and creates abstractions of it, enabling physical servers to be divided into many virtual machines, each of which acts as host to a separate operating system, or guest. The advantage of the software layer is extensive control over how these guest servers can be created and managed. A guest can be restarted or reached at console level in software, removing the need for a technician to perform a hard reboot or for an expensive out-of-band KVM-over-IP (KVMoIP) solution to be provisioned for each server. Further, provisioning can be fully automated, as there is no physical aspect to the build process that requires staff to assemble components and install operating systems. This makes building new servers inexpensive and fast.

The virtualised nature of cloud offers further advantages: as virtual machines run as abstracted hardware, they can be easily moved between physical machines without any changes to the operating system or installed software. So if a machine needs to be powered off for maintenance, the guest virtual machines running on it can be migrated to another machine, reducing or even eliminating downtime; even better, this can be done automatically if a piece of hardware unexpectedly fails – giving high availability and portability (moving to other hardware or environments).
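The maintenance scenario above can be illustrated with a toy placement routine. This is a minimal sketch, not how any particular hypervisor schedules migrations: the `Host` class, the `evacuate` function and the "most free RAM first" rule are all assumptions made for illustration, and only RAM is considered.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical machine (hypothetical model: RAM is the only resource)."""
    name: str
    ram_gb: int
    guests: dict = field(default_factory=dict)  # guest name -> RAM in GB

    def free_ram(self) -> int:
        return self.ram_gb - sum(self.guests.values())


def evacuate(source: Host, others: list) -> dict:
    """Move every guest off `source` and return {guest: destination host name}."""
    plan = {}
    # Place the largest guests first so they are not squeezed out by small ones.
    for guest, ram in sorted(source.guests.items(), key=lambda g: -g[1]):
        candidates = [h for h in others if h.free_ram() >= ram]
        if not candidates:
            raise RuntimeError(f"no host has {ram} GB free for {guest}")
        # Assumed policy: pick the host with the most free RAM.
        target = max(candidates, key=Host.free_ram)
        target.guests[guest] = ram
        del source.guests[guest]
        plan[guest] = target.name
    return plan


# Example: drain host "a" before powering it off for maintenance.
a = Host("a", 32, {"web": 8, "db": 16})
b = Host("b", 32, {"mail": 8})
c = Host("c", 32)
print(evacuate(a, [b, c]))
```

Because the guests see abstracted hardware, nothing inside them needs to change when they land on a different physical machine; the sketch only has to decide where each one fits.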

Resizing guests is fast – usually just a quick reboot is needed to expand or contract the RAM and CPU resources available – and cloning virtual machines is easy, making it painless to roll out copies of an application quickly, with no need to install operating systems, copy configuration and application files, or re-configure databases. This gives both vertical and horizontal scalability, as an IT manager can quickly grow both an application’s resources and the number of copies in use.

A separation or abstraction of hosting software from underlying hardware offers many clear advantages in terms of system portability, scalability and high availability.

The emergence of virtualisation technologies enabled the efficient production of metered utility computing (or “pay as you go”) services. In turn, the development of virtualisation was driven by outstanding advances in technology.

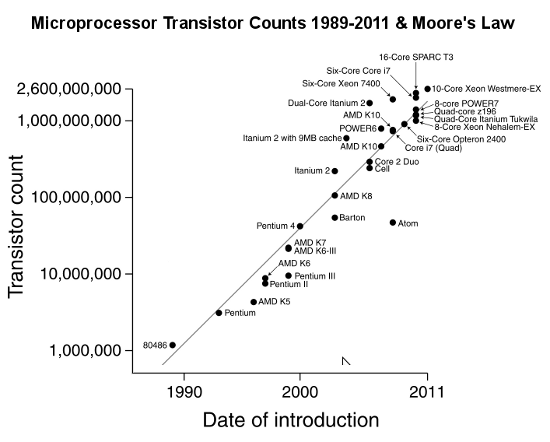

As seen in the diagram above, advances in CPU technology over the past decade have resulted in huge increases in computing power in line with Moore’s Law, which states that the number of transistors on integrated circuits doubles approximately every two years.
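The doubling rule quoted here can be written as a one-line projection. This is a rough sketch of the arithmetic only: the function name, the starting count and the fixed two-year period are illustrative assumptions, not measured data.

```python
def projected_transistors(initial: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, doubling every `doubling_period` years."""
    return initial * 2 ** (years / doubling_period)


# Ten years at one doubling every two years is five doublings, a factor of 32.
print(projected_transistors(1_000, 10))
```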

Whilst running costs for servers have increased (e.g. national grid power and generator fuel), the cost of server resources has fallen. For example:

- In 2003, AMD launched the Opteron 140 – a single core at $229 ($229 per core)

- In 2006, the AMD Opteron 165 was announced – a dual core at $417 ($208 per core)

- In 2012, AMD released the Opteron 6376 – 16 cores at $703 ($44 per core)

Between 2006 and 2012, on these figures, CPU price per core fell by nearly 80%. RAM prices have also decreased. This incentive to purchase more powerful servers has led to many being heavily underutilised, and therefore wasteful of both capital and running costs. Virtualisation technology has seen entire racks of servers condensed into only a handful of high-specification machines, considerably reducing capital expenditure (capex), maintenance and power costs. This has become a financial necessity for many.
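The per-core figures in the list above can be checked with a few lines of arithmetic. The prices and core counts are the ones quoted in the list; the function names are just for illustration.

```python
# (year, model, cores, launch price in USD), as quoted in the list above
cpus = [
    (2003, "Opteron 140", 1, 229),
    (2006, "Opteron 165", 2, 417),
    (2012, "Opteron 6376", 16, 703),
]


def price_per_core(price: float, cores: int) -> float:
    return price / cores


def percent_drop(old: float, new: float) -> float:
    """Percentage by which `new` is lower than `old`."""
    return (old - new) / old * 100


per_core = {year: price_per_core(price, cores) for year, _, cores, price in cpus}

for year, model, cores, price in cpus:
    print(f"{year} {model}: ${per_core[year]:.2f} per core")

print(f"2006 to 2012: {percent_drop(per_core[2006], per_core[2012]):.0f}% lower")
print(f"2003 to 2012: {percent_drop(per_core[2003], per_core[2012]):.0f}% lower")
```

On these list prices the drop from 2006 to 2012 is about 79%, and from 2003 to 2012 about 81%.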

Today, successful Cloud execution relies on powerful and stable virtualisation and storage platforms that are capable of aggregating servers and their resources and deploying multiple (virtual) compute resources: CPU, memory, storage, Internet bandwidth. Infrastructure providers supply the hardware and virtualisation resources so developers and IT managers know they can demand resources whenever required without having to set aside extensive capex or accommodate lengthy build times.

The Cloud has the power to revolutionise the way we all think about data centre services, as many examples in business and government already show, and ultimately to help the industry become a greener, more efficient, less wasteful outsourced IT service.
